Student researchers’ bionic arm wins grand prize at Sony competition

The summer research project shows promise as a low-cost, low-power prosthetic

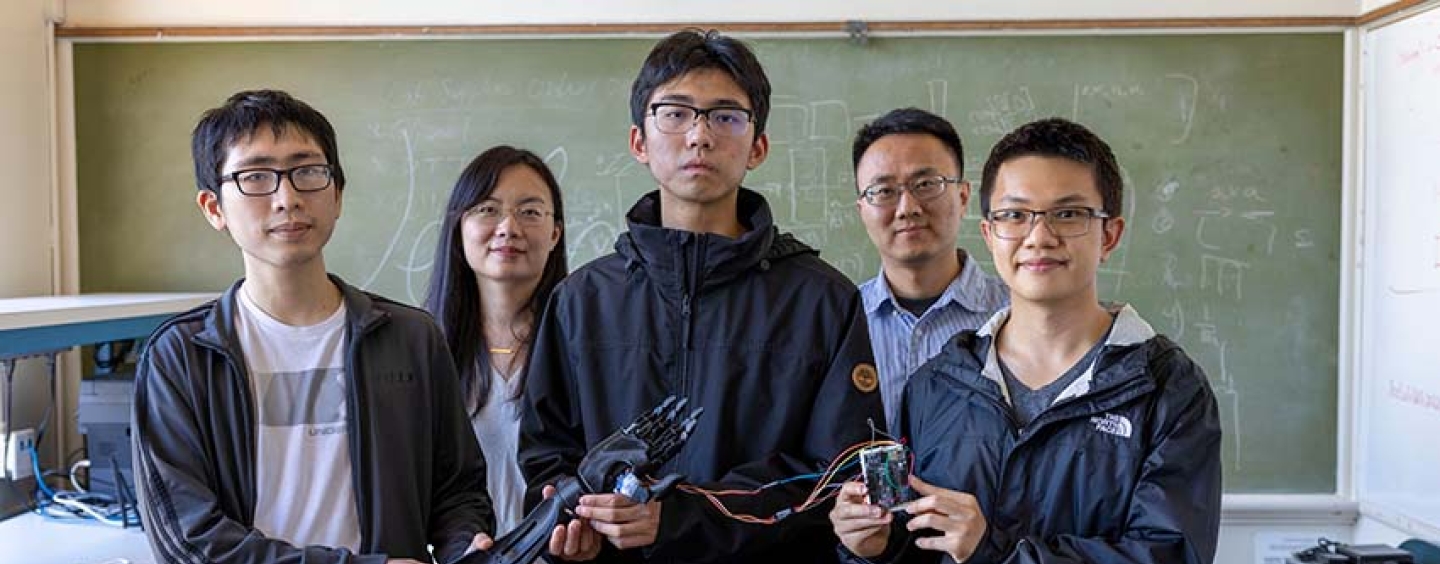

In June, five San Francisco State University research students, brought together by a shared interest in artificial intelligence, began collaborating on a summer engineering research project. Just 10 weeks later, the budding engineers earned one of three grand prizes in a virtual Sony-sponsored competition. Their winning project — a deep learning-based bionic arm — is a prototype for a prosthetic that could be used by patients after a stroke or amputation.

San Francisco State Assistant Professor of Computer Engineering Zhuwei Qin and Associate Professor of Computer Engineering Xiaorong Zhang mentored the research team of two SF State master’s students, two undergraduates and one local high school senior. Their project was one of 49 submissions to the contest, which drew 500 international participants of varying ages and levels of expertise.

“This project is unique for two reasons. The entire control system, for the first time, was implemented in a tiny edge device [Sony’s Spresense microcontroller] that can process electromyography signal in real-time,” Qin said. “We also introduced a deep-learning algorithm on the microcontroller, which is usually done on high-performance desktops, not on low-power devices.”

Building on the faculty’s earlier work, the new system collects muscle-generated electrical activity (electromyography, or EMG, signals) from a patient’s arm. The data is fed to the Sony microcontroller, which uses a form of artificial intelligence called deep learning to predict and generate the patient’s desired arm gestures in real time.
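To make that data flow concrete, here is a minimal Python sketch of the general idea: slice a multichannel electromyography (EMG) recording into short windows, summarize each window and pass it through a small neural network that picks a gesture. It is not the team's implementation. The channel count, window length, gesture names, the use of per-channel RMS features and the tiny untrained network are all assumptions made for illustration, and synthetic noise stands in for real muscle signals.

```python
import math
import random

# All constants below are illustrative assumptions, not values from the project.
NUM_CHANNELS = 4          # hypothetical number of EMG electrodes
WINDOW_SIZE = 200         # samples per analysis window
STEP = 100                # hop between consecutive windows
HIDDEN_UNITS = 8
GESTURES = ["rest", "open_hand", "close_hand", "point"]  # hypothetical labels


def sliding_windows(signal, size, step):
    """Yield overlapping windows from a list of per-sample channel readings."""
    for start in range(0, len(signal) - size + 1, step):
        yield signal[start:start + size]


def rms_features(window):
    """Root-mean-square amplitude of each channel within one window."""
    channels = len(window[0])
    return [
        math.sqrt(sum(sample[c] ** 2 for sample in window) / len(window))
        for c in range(channels)
    ]


def dense(inputs, weights, biases):
    """Fully connected layer: one weight row and one bias per output unit."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def random_layer(rng, n_out, n_in):
    """Untrained placeholder weights; a real model would load trained ones."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def predict_gesture(window, layers):
    """Map one EMG window to a (gesture label, probabilities) pair."""
    (w1, b1), (w2, b2) = layers
    hidden = [max(0.0, v) for v in dense(rms_features(window), w1, b1)]  # ReLU
    probs = softmax(dense(hidden, w2, b2))
    return GESTURES[probs.index(max(probs))], probs


rng = random.Random(0)
layers = [
    random_layer(rng, HIDDEN_UNITS, NUM_CHANNELS),
    random_layer(rng, len(GESTURES), HIDDEN_UNITS),
]
# Synthetic noise stands in for recorded EMG samples.
emg = [[rng.gauss(0.0, 1.0) for _ in range(NUM_CHANNELS)] for _ in range(1000)]
predictions = [
    predict_gesture(w, layers)[0]
    for w in sliding_windows(emg, WINDOW_SIZE, STEP)
]
print(predictions)
```

Because the weights here are random, the printed gestures carry no meaning. The point is only the shape of the loop: each new window of signal yields one predicted gesture, which a controller could then translate into motor commands for the arm.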

Traditionally, deep-learning algorithms (computer models that can learn tasks done by humans) run on expensive high-performance desktop computers, which consume a lot of power and are not portable.

“[Deep learning-based systems] are way too expensive for a lot of people, especially because deep learning is very demanding,” said Jimmy Lu, a senior at Lowell High School and one of the student researchers. “Utilizing the computation for an embedded system with the Sony Spresense microcontroller, we could easily bring that price down.”

Running the deep-learning algorithm on the Sony microcontroller cut both the cost and the power the system needs, and it still generates an arm gesture in less than two seconds. Using a 3D printer to build the prosthetic arm lowered costs further.

For many of the student researchers, this was their first hands-on experience with artificial intelligence — and for most, it was their first research experience to boot. It was definitely an eye-opening experience, the students say.

“In the research lab, nobody knows how to solve the difficulty, even the professor,” fourth-year Computer Science undergraduate Zhenyu Lin said. “Because that is the research, right? You’re supposed to find the solution.”

The five students each brought different engineering expertise to the project and enjoyed working as a group and learning from each other. Beyond the technical skills they gained, they all noted how important teamwork and communication were over the summer.

“It was definitely a two-way street in terms of learning. Although I am a graduate student, there are a lot of things, especially in the deep learning and machine learning, I feel that Zhenyu and Jimmy are way more knowledgeable about,” said second-year master’s student Philip Liang, pointing to his undergraduate and high school colleagues, respectively.

“Even if you don’t have a lot of experience in a certain field, you can always learn more about it,” Liang said. “So don’t give up on it just because you don’t know.”

Some of the students will continue working on the project into the school year, aiming to improve the robustness and reliability of the arm.

Visit the School of Engineering to learn more about research and academic programs.